I am not a fan of New Year's resolutions. If I were, one of them would be to get better at writing down what I am working on. Still, I gave some talks and did some fun experiments in 2017, so the cheap way out is simply to link to those.

How to become a Data Scientist in 20 minutes (JavaZone 2017)

At JavaZone 2017 I gave a short talk on how building (drum roll) machine learning models is actually pretty easy. Simply put: I built a regression model that predicts the fair price of a car, and then explained how I used that model to actually buy one. The main message was that as long as you validate your model properly (don't train and test on the same data), you don't really need to understand the algorithm to get good results (use random forest!).
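To make "don't train and test on the same data" concrete, here is a minimal, dependency-free sketch. It is not the model or data from the talk: the car data is a made-up toy set (age in years, mileage in 1,000 km, price), and instead of a random forest it uses a 1-nearest-neighbour regressor, chosen because it memorises its training set perfectly. Scoring it on the rows it was trained on reports zero error; only the held-out rows show the real error.

```python
import random

def make_cars(n, seed=0):
    """Hypothetical toy data: ((age_years, mileage_1000km), price) with noise."""
    rng = random.Random(seed)
    cars = []
    for _ in range(n):
        age = rng.uniform(0, 15)
        km = rng.uniform(0, 300)
        price = 400_000 - 18_000 * age - 600 * km + rng.gauss(0, 20_000)
        cars.append(((age, km), price))
    return cars

def train_test_split(rows, test_fraction=0.25, seed=1):
    """Shuffle and split so the test rows are never seen during training."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]

def predict_1nn(train, x):
    """Copy the price of the closest training car (mileage scaled down by 20
    so it does not dominate the distance)."""
    _, price = min(
        train,
        key=lambda row: (row[0][0] - x[0]) ** 2 + ((row[0][1] - x[1]) / 20) ** 2,
    )
    return price

def mean_abs_error(train, eval_rows):
    errors = [abs(predict_1nn(train, x) - y) for x, y in eval_rows]
    return sum(errors) / len(errors)

cars = make_cars(400)
train, test = train_test_split(cars)
train_mae = mean_abs_error(train, train)  # scored on data the model has seen
test_mae = mean_abs_error(train, test)    # scored on held-out data
print(f"train MAE: {train_mae:.0f}  test MAE: {test_mae:.0f}")
```

Swapping in a random forest (for example scikit-learn's `RandomForestRegressor`) changes the model but not the evaluation logic: the number to trust is the one computed on `test`.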

We published both the algorithm and the dataset here so that anyone could replicate it, and JavaZone even filmed the talk.

Algorithms we use at FINN.no to recommend you good stuff

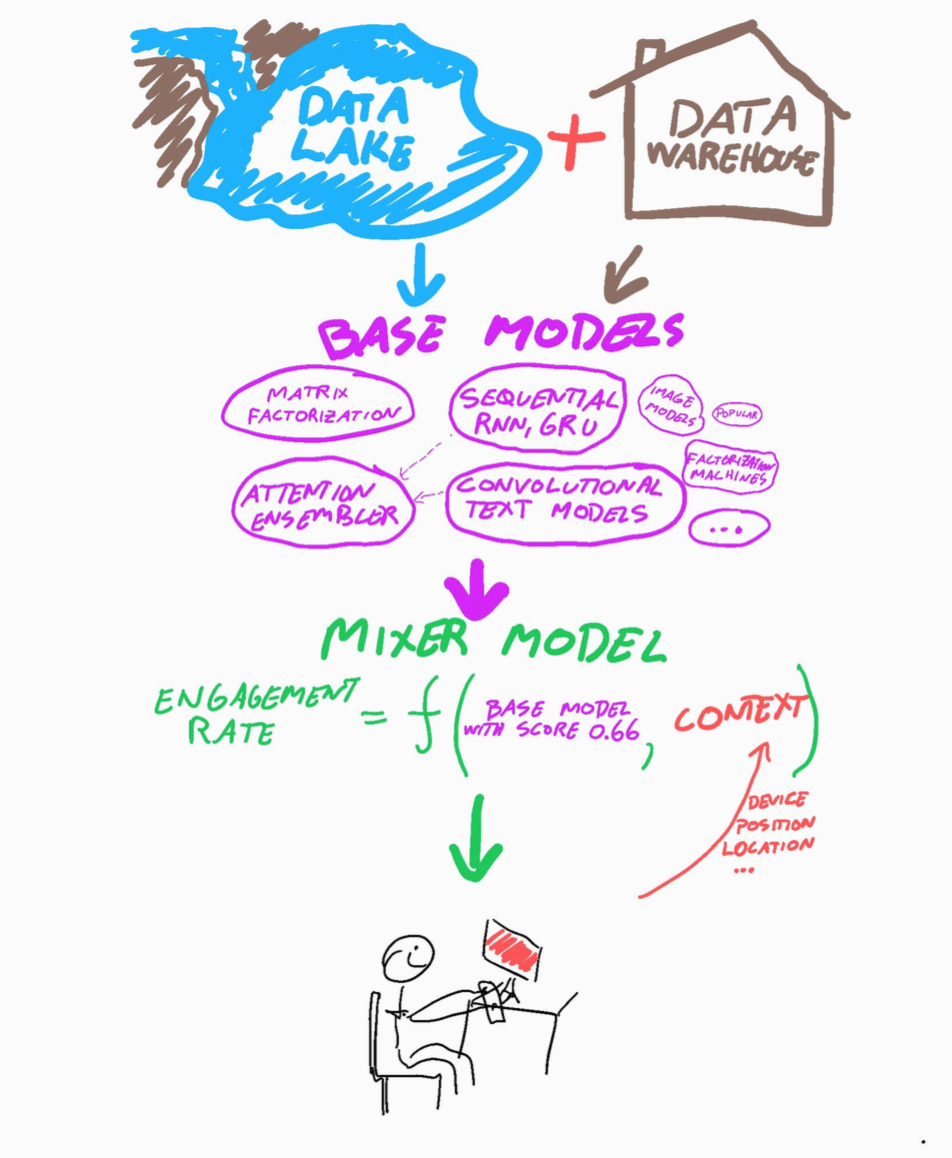

I have actually given three talks on this. The first was at the Oslo Data Science meetup in February, where I talked about our journey from pure collaborative filtering (CF) models to TensorFlow.

Since then we have tried out a lot of things and moved to Keras, so we could skip all the boilerplate. I gave two talks on this in the fall: a beer talk hosted by Bekk Consulting and an early-morning talk with Bearpoint. The presentations were very similar and can be downloaded here.
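The post does not spell out which CF models we started from. As a hedged illustration of the general idea only, here is a minimal matrix-factorisation recommender trained with stochastic gradient descent: each user and each item gets a small vector, and their dot product is trained to match observed interaction scores. The data, dimensions and hyperparameters are all made up; a TensorFlow or Keras model learns the same kind of vectors with far less hand-written update code.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def train_mf(interactions, n_users, n_items, dim=4, lr=0.05, reg=0.01, epochs=500, seed=0):
    """Factorise (user, item, score) triples into user and item vectors via SGD."""
    rng = random.Random(seed)
    users = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(n_users)]
    items = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(n_items)]
    data = list(interactions)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, score in data:
            pu, qi = users[u], items[i]
            err = score - dot(pu, qi)
            for k in range(dim):
                pu_k, qi_k = pu[k], qi[k]
                # Gradient step on squared error with L2 regularisation.
                pu[k] += lr * (err * qi_k - reg * pu_k)
                qi[k] += lr * (err * pu_k - reg * qi_k)
    return users, items

def squared_error(users, items, interactions):
    return sum((s - dot(users[u], items[i])) ** 2 for u, i, s in interactions)

def recommend(users, items, u, seen, k=2):
    """Top-k unseen items for user u, ranked by predicted score."""
    scores = [(dot(users[u], items[i]), i) for i in range(len(items)) if i not in seen]
    return [i for _, i in sorted(scores, reverse=True)[:k]]

# Hypothetical data: users 0-1 like items 0-2, users 2-3 like items 3-4.
interactions = [(0, 0, 1), (0, 1, 1), (0, 3, 0), (1, 0, 1), (1, 1, 1), (1, 2, 1),
                (1, 4, 0), (2, 3, 1), (2, 4, 1), (2, 0, 0), (3, 3, 1), (3, 1, 0)]
init_users, init_items = train_mf(interactions, n_users=4, n_items=5, epochs=0)
users, items = train_mf(interactions, n_users=4, n_items=5)
print("error before:", squared_error(init_users, init_items, interactions),
      "after:", squared_error(users, items, interactions))
print(recommend(users, items, u=0, seen={0, 1, 3}))
```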

Testing RNN #recommenders for @FINN_tech. The RNN (bottom) generalize better when looking at last 10 items compared to only the last (mid). pic.twitter.com/feZMcz1n97

— Simen Eide (@simeneide) August 31, 2017
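The comparison in the tweet, a model that sees only the last viewed item versus an RNN that sees the last 10, starts with how training examples are built. As a hedged sketch (the function name and padding convention are assumptions, not our production code), this turns one user's browsing history into (context, next item) pairs: `window=1` gives the "last item only" setup, and `window=10` gives the fixed-length sequence an RNN would consume.

```python
PAD = 0  # hypothetical padding id; real item ids are assumed to start at 1

def make_examples(history, window):
    """Turn one user's ordered item views into (last `window` items, next item) pairs.

    Contexts shorter than `window` are left-padded with PAD so every example
    has the same length, as a fixed-length RNN input requires.
    """
    examples = []
    for t in range(1, len(history)):
        context = history[max(0, t - window):t]
        context = [PAD] * (window - len(context)) + context
        examples.append((context, history[t]))
    return examples

history = [12, 7, 7, 31, 5]
for context, target in make_examples(history, window=3):
    print(context, "->", target)
```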

Workshop for FINN developers on Machine Learning

In November I held a three-hour machine learning workshop with some colleagues. The idea was, just as in the JavaZone talk, to demystify building these models. We spun up GPU machines for all the participants and prepared a dataset of FINN ads, along with a baseline script showing how to classify the ads based on their title. The dataset held around 500,000 ads spread over 20 or so categories. The task was to understand the baseline algorithm and then improve it by changing the architecture, learning rate, optimizer and so on. The baseline averaged the word2vec vectors of the words in the title, but the winners used a GRU layer on the title to snatch the last percentage points of validation accuracy. That was really impressive: none of them had done machine learning before, and then they started tinkering with recurrent neural nets!

Data scientist beware! Sixty developers at @FINN_tech with no prior #MachineLearning experience just build a deep neural classifier in 2.5hrs that beat my model! pic.twitter.com/HmG29pi7mM

— Simen Eide (@simeneide) November 21, 2017
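The workshop baseline represented each title by the average of its words' word2vec vectors. A minimal sketch of that step, with made-up 3-dimensional vectors standing in for real word2vec embeddings and a simple nearest-centroid rule standing in for the classifier (the post does not say what sat on top of the averaged vectors):

```python
import math

# Hypothetical 3-d "word2vec" vectors; real embeddings have hundreds of dimensions.
WORD_VECTORS = {
    "sofa": [0.9, 0.1, 0.0],
    "leather": [0.6, 0.3, 0.1],
    "chair": [0.8, 0.0, 0.2],
    "bike": [0.0, 0.9, 0.1],
    "mountain": [0.1, 0.8, 0.2],
    "wheels": [0.0, 0.7, 0.4],
}

def title_vector(title, dim=3):
    """Average the vectors of known words; unknown words are skipped."""
    vecs = [WORD_VECTORS[w] for w in title.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return [0.0] * dim
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

def fit_centroids(labelled_titles):
    """One centroid per category: the mean of its titles' averaged vectors."""
    grouped = {}
    for title, category in labelled_titles:
        grouped.setdefault(category, []).append(title_vector(title))
    return {c: [sum(col) / len(vs) for col in zip(*vs)] for c, vs in grouped.items()}

def classify(title, centroids):
    v = title_vector(title)
    return max(centroids, key=lambda c: cosine(v, centroids[c]))

train = [("leather sofa", "furniture"), ("chair", "furniture"),
         ("mountain bike", "bikes"), ("bike wheels", "bikes")]
centroids = fit_centroids(train)
print(classify("leather chair", centroids))
```

Averaging throws away word order, which is plausibly part of why a GRU reading the title word by word could squeeze out the last percentage points.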

Clothing GANs

At the end of the year we had a little GAN workshop here where, among other things, we trained a model to generate new clothing. I was impressed by how easy that was. Maybe FINN should start generating images for your ad if you can't be bothered to take your own photos? ;)

Do you need to sell off some clothes at @FINN_tech, but not happy with your own image? This is our first try at building a #GAN model that can generate arbitrary clothing images for you! Next steps: upscaling, conditioning and maybe a fashion show? pic.twitter.com/Vkd7takdHT

— Simen Eide (@simeneide) December 17, 2017

Neural Search Engines

I have been working on models that take text as input and output a FINN ad (aka a search engine). They were also enhanced with user features, so that the search would be personalized to the individual user. It worked all right, but we are currently working on a “simpler” way to personalize FINN's search results, combining the good old search engine with our recommendation models.

Search engines are maybe not the most sexy, but it was really fun to learn a model to predict our #recommendation vectors based on a word! pic.twitter.com/yPDsLkKYxc

— Simen Eide (@simeneide) September 5, 2017
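The tweet describes a model that maps a search word into the same vector space as our recommendation vectors. Assuming such a mapping exists (here `query_vec` is simply given), ranking ads becomes a nearest-neighbour lookup by cosine similarity. Blending in a user vector is one simple, hypothetical way to personalise the ranking; the `alpha` weight is an illustrative knob, not something the post describes.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)

def rank_ads(query_vec, ad_vecs, user_vec=None, alpha=0.0, k=3):
    """Rank ad ids by cosine similarity to a (possibly personalised) query vector.

    alpha=0 ignores the user vector; alpha=1 ignores the query.
    """
    target = normalize(query_vec)
    if user_vec is not None and alpha > 0:
        u = normalize(user_vec)
        target = normalize([(1 - alpha) * q + alpha * x for q, x in zip(target, u)])
    scored = []
    for ad_id, vec in ad_vecs.items():
        scored.append((sum(a * b for a, b in zip(target, normalize(vec))), ad_id))
    scored.sort(reverse=True)
    return [ad_id for _, ad_id in scored[:k]]

# Hypothetical item vectors from the recommender.
ads = {
    "red-bike": [1.0, 0.1, 0.0],
    "blue-bike": [0.9, 0.0, 0.4],
    "sofa": [0.0, 1.0, 0.0],
}
query = [1.0, 0.0, 0.0]  # pretend output of the word-to-vector model for "bike"
user = [0.0, 0.0, 1.0]   # pretend user vector from the recommender
print(rank_ads(query, ads, k=2))
print(rank_ads(query, ads, user_vec=user, alpha=0.5, k=2))
```

The same query returns both bikes in either case; the user vector only changes which one comes first.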

Self-driving RC car ++

I have been tinkering a bit with a self-driving car. The project is called donkeycar. Basically it is an RC car that you control through a Python API running on a Raspberry Pi. The project also integrates TensorFlow, so you can use imitation learning to teach it to drive a path. I got it to follow the road, and also a white line. The hope was to spend enough time to build some reinforcement learning into it, but I haven't had time (yet!).
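In donkeycar, imitation learning means driving the car manually while it records camera frames together with your steering, then training a neural network to predict the steering from the frame. The core idea, behavioural cloning, fits in a few lines if we make two loud simplifications: the camera image is replaced by a single hypothetical feature (the white line's horizontal offset from the image centre), and the network is replaced by a least-squares line. The recordings below are made up.

```python
def fit_linear_policy(recordings):
    """Least-squares fit of steering = w * offset + b from human driving data.

    recordings: list of (line_offset, steering) pairs. line_offset is a
    hypothetical feature in [-1, 1]; steering is the human's command in [-1, 1].
    """
    n = len(recordings)
    mean_x = sum(x for x, _ in recordings) / n
    mean_y = sum(y for _, y in recordings) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in recordings)
    var = sum((x - mean_x) ** 2 for x, _ in recordings)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

def steer(policy, line_offset):
    """Apply the cloned policy, clamped to the servo range [-1, 1]."""
    w, b = policy
    return max(-1.0, min(1.0, w * line_offset + b))

# Made-up "human driver" recordings: steer towards the line, with some noise.
recordings = [(-0.8, -0.62), (-0.4, -0.33), (0.0, 0.02), (0.3, 0.25), (0.7, 0.55)]
policy = fit_linear_policy(recordings)
print(policy, steer(policy, 0.5))
```

A real donkeycar model learns its own features from raw pixels, but the training signal is the same: the recorded human steering.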

Car + neural net = SELF-DRIVING CAR! Can I claim Norway's first self-driving amateur car?! #autonomousdriving #donkeycar #in #DeepLearning pic.twitter.com/vwZJcfLqLd

— Simen Eide (@simeneide) August 18, 2017

Our self driving project isnt fast, but atleast can go on forever. Driving using a single neural network on a raspberry PI #donkeycar pic.twitter.com/6y5sf8AnMi

— Simen Eide (@simeneide) October 28, 2017

How my #donkeycar is detecting road, grass and horizon for #autonomousdriving. Slow and steady progress.. pic.twitter.com/VlL3hGmcWn

— Simen Eide (@simeneide) September 1, 2017